It could use a little work on the gradient, and some extra geometry wouldn't hurt. There are four sunbeams in this test scene, and each is just a plane (two triangles). I'm thinking of splitting each plane vertically into thirds so I can bend it into more of a semicircle instead of having it lie flat in the background. That might give it some depth and help with the right-most sunbeam looking so thin.
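
Here is a minimal sketch of what "splitting the plane into thirds and bending it" could look like as generated geometry. It is not code from the renderer. The `Vec3` type, the `curved_beam` function, the counter-clockwise winding, and the choice of -Z as "away from the camera" are all assumptions for illustration. With `columns = 3` it produces 8 vertices and 6 triangles, and with `columns = 1` it falls back to the current flat quad.

```rust
/// A vertex position in object space (hypothetical type for this sketch).
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Vec3 {
    pub x: f32,
    pub y: f32,
    pub z: f32,
}

/// Builds a vertical strip of `columns` quads bent along a half circle of `radius`,
/// `height` units tall. Returns (vertices, triangle indices).
/// With `columns = 1` this degenerates to a single flat quad (two triangles).
pub fn curved_beam(columns: usize, radius: f32, height: f32) -> (Vec<Vec3>, Vec<[u16; 3]>) {
    assert!(columns >= 1, "need at least one column");
    let mut vertices = Vec::with_capacity(2 * (columns + 1));
    for i in 0..=columns {
        // Sweep the angle from PI (left edge) to 0 (right edge).
        let theta = std::f32::consts::PI * (1.0 - i as f32 / columns as f32);
        let x = radius * theta.cos();
        // Interior columns bulge toward -Z (assumed to point away from the camera).
        let z = -radius * theta.sin();
        vertices.push(Vec3 { x, y: 0.0, z }); // bottom vertex of this column edge
        vertices.push(Vec3 { x, y: height, z }); // top vertex of this column edge
    }
    let mut triangles = Vec::with_capacity(2 * columns);
    for i in 0..columns as u16 {
        // Bottom/top vertices of the left and right edges of column `i`.
        let (b0, t0, b1, t1) = (2 * i, 2 * i + 1, 2 * i + 2, 2 * i + 3);
        // Two triangles per column, counter-clockwise when seen from the front.
        triangles.push([b0, b1, t1]);
        triangles.push([b0, t1, t0]);
    }
    (vertices, triangles)
}
```

Raising `columns` past 3 would give a smoother curve at the cost of more triangles, which matters given the fill-rate concerns later in this post.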

It was a pleasure to create something that looks so different without adding or changing anything in the renderer crate at all! That really proves out the programmable pipeline implementation. The shaders are self-contained, and uniforms and attributes work exactly as expected. The sunbeam animations are done simply by passing a timestamp to the shader through a uniform. I even shifted the timestamp slightly for each sunbeam so they aren't exact duplicates of one another (breaking up "the grid").
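
The timestamp-plus-offset idea can be sketched as a plain function. This is not the actual shader from the post. `SunbeamUniforms`, `sunbeam_intensity`, the sine-based edge falloff, and the shimmer constants are all hypothetical. The point is that every beam runs the same shader, and only the per-beam `time_offset` in the uniform makes them animate out of step.

```rust
/// Hypothetical uniform block; the renderer's real uniform type isn't shown in the post.
#[derive(Clone, Copy)]
pub struct SunbeamUniforms {
    pub time: f32,        // seconds since start, updated once per frame
    pub time_offset: f32, // per-sunbeam shift so beams don't animate in lockstep
}

/// Returns a brightness in [0, 1] for a fragment at horizontal texture coordinate `u`.
pub fn sunbeam_intensity(u: f32, uniforms: &SunbeamUniforms) -> f32 {
    let t = uniforms.time + uniforms.time_offset;
    // Soft falloff toward the beam edges: 0 at u = 0 and u = 1, 1 at u = 0.5.
    let edge = (u * std::f32::consts::PI).sin();
    // Slow shimmer oscillating between 0.5 and 1.0; `u` skews the phase across the beam.
    let shimmer = 0.75 + 0.25 * (t * 1.5 + u * 4.0).sin();
    (edge * shimmer).clamp(0.0, 1.0)
}
```

Drawing four beams would then mean four draw calls that share one shader and differ only in `time_offset`.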

Bug Fixes

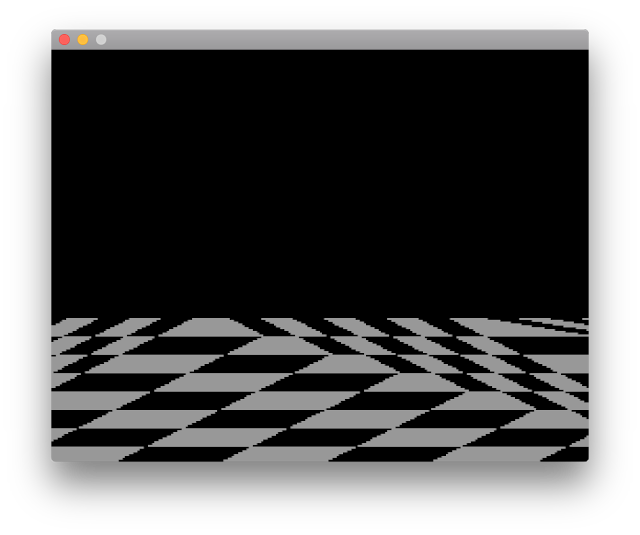

I was able to get the Blender export running in the renderer very quickly, as expected. However, I did spend a lot of time setting up the Blender camera to match the pixel aspect ratio, screen resolution, and field of view. This will be crucial in the coming stages of asset creation, because it lets me frame the scene exactly as it will look in the game. I had a false start right away because the renderer was drawing a textured ground plane incorrectly. It was difficult to make out features in the texture when comparing it side by side with the same camera position in Blender. When I switched the texture to a simple checkerboard pattern, it dawned on me that the problem was the texture mapping.
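
Matching the two cameras mostly comes down to agreeing on the display aspect ratio. The sketch below shows only the general relationship. It assumes pixel aspect means pixel width divided by pixel height and that the field of view is specified horizontally. The function names are hypothetical, and Blender's own sensor-fit settings may pick a different axis to hold fixed.

```rust
/// Display aspect ratio of a framebuffer whose pixels are `pixel_aspect`
/// times as wide as they are tall (1.0 = square pixels).
pub fn display_aspect(width: u32, height: u32, pixel_aspect: f32) -> f32 {
    width as f32 * pixel_aspect / height as f32
}

/// Converts a horizontal field of view (radians) into the vertical field of view
/// that covers the same image at the given display aspect ratio.
pub fn vertical_fov(horizontal_fov: f32, aspect: f32) -> f32 {
    2.0 * ((horizontal_fov * 0.5).tan() / aspect).atan()
}
```

For example, a 320x200 buffer whose pixels are 5/6 as wide as they are tall has the same 4:3 shape as 320x240 with square pixels, so both need the same projection.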

[Figure: Affine texture mapping causes warping when a plane is viewed at an oblique angle]

This artifact comes from affine texture mapping. It's the same technique the original PlayStation used, and you can spot it in nearly every game on that console, especially on walls that seem to bend when they are nearly parallel to the camera direction. Affine texture mapping is cheap because it interpolates texture coordinates linearly in screen space and ignores each vertex's depth.
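
As a minimal sketch (the `Uv` type and `affine_uv` function are hypothetical, not the renderer's API), affine mapping is just a blend of the vertex UVs using the fragment's screen-space barycentric weights:

```rust
/// Texture coordinates at a vertex.
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Uv {
    pub u: f32,
    pub v: f32,
}

/// Affine texture mapping: blend the vertex UVs with the fragment's screen-space
/// barycentric weights (which sum to 1). Vertex depth never enters the calculation,
/// so equal steps across the screen become equal steps across the texture, even when
/// one end of the triangle is much farther from the camera than the other.
pub fn affine_uv(bary: [f32; 3], uv: [Uv; 3]) -> Uv {
    Uv {
        u: bary[0] * uv[0].u + bary[1] * uv[1].u + bary[2] * uv[2].u,
        v: bary[0] * uv[0].v + bary[1] * uv[1].v + bary[2] * uv[2].v,
    }
}
```

At the screen-space midpoint of an edge whose far vertex is three times as distant as the near one, this returns u = 0.5, while the perspective-correct answer is u = 0.25. That mismatch is the bending you see on the checkerboard.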

Fixing it was very easy: just divide each fragment's barycentric coordinates on the triangle by the w-component of the corresponding vertex, and then divide again by the sum of those weighted coordinates so they add up to 1! The reasons for this are beyond my capacity to care, but you can read all about the maths behind it here and of course here.
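
The two divisions described above amount to b_i' = (b_i / w_i) / (b_0/w_0 + b_1/w_1 + b_2/w_2). Here is a small sketch of that formula. The function names are hypothetical, and `w` is assumed to be each vertex's clip-space w, which equals its view-space depth for a standard perspective projection.

```rust
/// Converts screen-space barycentric weights into perspective-correct weights.
/// `w` holds each vertex's clip-space w component.
pub fn perspective_correct(bary: [f32; 3], w: [f32; 3]) -> [f32; 3] {
    // Step 1: divide each weight by its own vertex's w.
    let p = [bary[0] / w[0], bary[1] / w[1], bary[2] / w[2]];
    // Step 2: renormalize so the weights sum to 1 again.
    let sum = p[0] + p[1] + p[2];
    [p[0] / sum, p[1] / sum, p[2] / sum]
}

/// Interpolates any per-vertex attribute (a UV component, a color channel, ...)
/// with the corrected weights.
pub fn interpolate(weights: [f32; 3], values: [f32; 3]) -> f32 {
    weights[0] * values[0] + weights[1] * values[1] + weights[2] * values[2]
}
```

With screen weights [0.5, 0.5, 0] and w = [1, 3, 1], the corrected weights are [0.75, 0.25, 0], so a U coordinate running from 0 to 1 along that edge comes out as 0.25 instead of the affine 0.5. When all three w values are equal, the weights come back unchanged, which is why the error only appears on surfaces that recede from the camera. The extra divides per fragment are the added cost mentioned in the caption below.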

[Figure: Perspective-correct texture mapping is more expensive, but visually appealing]

You can see some floating-point precision errors right down the middle of the image, but I'm OK with that! With the texture mapping taken care of, I was able to render the test scene exactly as shown in Blender's camera view. That was a great feeling. It confirmed that the effort I put into pixel aspect ratios and gradient dithering is paying off.

A few other minor bugs were also fixed. An off-by-one error made the bounding box clipping too aggressive, so the right-most column and top-most row of pixels were never drawn. Second, returning discard from a fragment shader didn't work correctly because the z-buffer was updated before the fragment shader was called. And finally, gradients whose first step position was greater than 0 were broken. I had known about this one since adding the debug GUI: moving the first color step caused the gradient to invert for input values below the first position. All of these were trivial to debug and fix.
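
The following sketch shows one correct ordering for these three fixes. It is not the renderer's code. `Fragment`, `Target`, `fill_box`, `Stop`, and `sample_gradient` are hypothetical names. The pixel loop is simplified to an axis-aligned box instead of a real triangle, and smaller z is assumed to mean closer. The post doesn't say what caused the gradient inversion, so the sketch only shows clamping below the first stop as one way to get the expected behavior.

```rust
/// Result of a fragment shader: a color, or a request to discard the fragment.
pub enum Fragment {
    Color(u32),
    Discard,
}

/// Minimal render target with a color buffer and a z-buffer (smaller z = closer).
pub struct Target {
    pub width: usize,
    pub height: usize,
    pub color: Vec<u32>,
    pub depth: Vec<f32>,
}

impl Target {
    pub fn new(width: usize, height: usize) -> Self {
        let n = width * height;
        Target { width, height, color: vec![0; n], depth: vec![f32::INFINITY; n] }
    }

    /// Shades every pixel in the inclusive box [x0, x1] x [y0, y1] at depth `z`.
    /// Assumes a non-empty target (width and height > 0).
    pub fn fill_box(&mut self, x0: usize, y0: usize, x1: usize, y1: usize, z: f32,
                    shade: impl Fn(usize, usize) -> Fragment) {
        // Inclusive bounds clamped to the last valid column/row; an exclusive range
        // here is the kind of off-by-one that drops the last column and row.
        for y in y0..=y1.min(self.height - 1) {
            for x in x0..=x1.min(self.width - 1) {
                let i = y * self.width + x;
                // Depth *test* only; nothing is written until the shader has run.
                if z >= self.depth[i] {
                    continue;
                }
                match shade(x, y) {
                    Fragment::Discard => {} // leave both color and depth untouched
                    Fragment::Color(c) => {
                        self.color[i] = c;
                        self.depth[i] = z; // z-buffer updated only for kept fragments
                    }
                }
            }
        }
    }
}

/// A gradient stop: position in [0, 1] and the value at that position.
pub struct Stop {
    pub pos: f32,
    pub value: f32,
}

/// Samples a gradient whose stops are sorted by position (at least one stop).
/// Inputs before the first stop clamp to its value; inputs after the last clamp to the last.
pub fn sample_gradient(stops: &[Stop], t: f32) -> f32 {
    let first = &stops[0];
    let last = &stops[stops.len() - 1];
    if t <= first.pos {
        return first.value;
    }
    if t >= last.pos {
        return last.value;
    }
    for pair in stops.windows(2) {
        let (a, b) = (&pair[0], &pair[1]);
        if t <= b.pos {
            // Linear blend between the two surrounding stops.
            let f = (t - a.pos) / (b.pos - a.pos);
            return a.value + f * (b.value - a.value);
        }
    }
    last.value
}
```

Running the shader before touching the z-buffer costs nothing extra here, and it means a discarded fragment can never block geometry drawn behind it later.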

Adding a Third Shader

Sunbeams are cool and all, but I also want to add some water animation. It will be small pools of water collecting on the ground. Infrequent drips from the ceiling will disturb the pools, causing ripples. The player's feet will splash. You get the idea.

This will be more difficult to pull off than the sunbeams, because the reflection in the water will require either reading the frame buffer or rendering the scene to a separate render target texture. My test scene is too simple right now to make rendering to a target worthwhile. But if I add a background and some more interesting rock structures on and around the cave floor, it would definitely be worth rendering the scene from the water's point of view for a more accurate reflection.
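
To illustrate the cheaper "read the frame buffer" option, here is a crude sketch that mirrors already-drawn pixels across a horizontal water line in screen space, with an optional sideways ripple offset. The function and its parameters are hypothetical, and the approach is only an approximation that assumes a flat pool seen from a roughly level camera. It also can only reflect what is already on screen, which is exactly the limitation that rendering from the water's point of view would remove.

```rust
/// Screen-space reflection approximation for a flat pool whose surface sits on
/// screen row `water_y` (rows increase downward). A water pixel at row `y` copies
/// the already-rendered pixel at row 2 * water_y - y, shifted by `ripple_dx` columns.
/// Returns None when the mirrored sample falls outside the frame buffer.
pub fn reflect_from_framebuffer(
    frame: &[u32],
    width: usize,
    height: usize,
    x: usize,
    y: usize,
    water_y: usize,
    ripple_dx: i32,
) -> Option<u32> {
    // Mirror the row across the water line; going above row 0 means no data.
    let mirrored_y = (2 * water_y).checked_sub(y)?;
    if mirrored_y >= height {
        return None;
    }
    // Apply the ripple offset horizontally and reject samples off either side.
    let sx = x as i64 + ripple_dx as i64;
    if sx < 0 || sx >= width as i64 {
        return None;
    }
    Some(frame[mirrored_y * width + sx as usize])
}
```

A ripple shader could then feed a small, time-varying `ripple_dx` per pixel, the same way the sunbeams use the timestamp uniform.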

It's going to be pretty impressive. The only thing I'm worried about is runtime performance. Everything is very unoptimized at the moment, and the renderer is using much more CPU time with this small scene than I would like. Triangle rate isn't much of a problem: the two-heads scene had about 5,000 triangles and used about 40% CPU.

Now I have 18 triangles using 65% of CPU time! It's clearly limited by pixel (fill) rate. I have some really awesome opportunities for optimization, though. With some SIMD tricks, I should be able to make it up to 4x faster just by drawing 2x2 or 4x1 pixel blocks at a time. That assumes my MCU has NEON support... but by the time I'm ready to put this thing on hardware, common MCUs should have everything I need.
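
Here is a sketch of what "drawing 2x2 pixel blocks" means for the coverage test. It is not the renderer's rasterizer, and `edge` and `quad_coverage` are hypothetical names. The four pixel centers of a quad are laid out as fixed-size `[f32; 4]` lanes, which is the shape a 4-wide SIMD unit works on. Whether the compiler actually turns this into NEON (or any SIMD) instructions is not guaranteed; explicit intrinsics would be the next step.

```rust
/// Edge function for the directed edge a -> b, evaluated at p.
/// Its sign tells which side of the line p is on; zero means on the line.
fn edge(ax: f32, ay: f32, bx: f32, by: f32, px: f32, py: f32) -> f32 {
    (bx - ax) * (py - ay) - (by - ay) * (px - ax)
}

/// Coverage of the 2x2 quad whose top-left pixel is (x, y), tested at pixel centers.
/// Lanes: 0 = (x, y), 1 = (x + 1, y), 2 = (x, y + 1), 3 = (x + 1, y + 1).
pub fn quad_coverage(tri: [(f32, f32); 3], x: u32, y: u32) -> [bool; 4] {
    let (fx, fy) = (x as f32, y as f32);
    let px = [fx + 0.5, fx + 1.5, fx + 0.5, fx + 1.5];
    let py = [fy + 0.5, fy + 0.5, fy + 1.5, fy + 1.5];
    let mut mask = [false; 4];
    // The same arithmetic runs on all four lanes, with no branches between them.
    for lane in 0..4 {
        let w0 = edge(tri[1].0, tri[1].1, tri[2].0, tri[2].1, px[lane], py[lane]);
        let w1 = edge(tri[2].0, tri[2].1, tri[0].0, tri[0].1, px[lane], py[lane]);
        let w2 = edge(tri[0].0, tri[0].1, tri[1].0, tri[1].1, px[lane], py[lane]);
        // Accept either winding: all weights non-negative or all non-positive.
        mask[lane] = (w0 >= 0.0 && w1 >= 0.0 && w2 >= 0.0)
            || (w0 <= 0.0 && w1 <= 0.0 && w2 <= 0.0);
    }
    mask
}
```

The fragment shader would then also run four lanes at once, using the mask to drop uncovered pixels. Partially covered quads are one reason the real speedup tends to land below the ideal 4x.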

Improving the Test Scene

So I guess fleshing out this test scene with more interesting geometry is how I'll spend this next week. I'll at least set it up for water pools, even if I don't get the shader fully integrated. I'll also add a background that isn't just pure black. The ground texture will have to change soon, as well. It was just the first decent thing I found with an image search, but it doesn't feel right for the camera position, and it doesn't fit my expectations for a cave.

The last part will be figuring out the lighting. More geometry will immediately make the Gouraud shading look better, since Gouraud shading computes lighting at the vertices and interpolates it across each triangle, so more vertices means finer lighting detail. But I will also need real-time lighting beyond just the ambient light. You can expect to see all of these changes in follow-up posts. As always, I'll update you next week.
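
This sketch shows why vertex count matters for Gouraud shading. Lighting (here an ambient term plus Lambertian diffuse from one directional light, which is an assumed lighting model) is evaluated only at the vertices, and each fragment just blends those three results. `Vec3`, `vertex_light`, and `gouraud` are hypothetical names, not the renderer's API.

```rust
#[derive(Clone, Copy)]
pub struct Vec3 {
    pub x: f32,
    pub y: f32,
    pub z: f32,
}

impl Vec3 {
    fn dot(self, o: Vec3) -> f32 {
        self.x * o.x + self.y * o.y + self.z * o.z
    }
}

/// Per-vertex lighting: ambient plus Lambertian diffuse from one directional light.
/// `normal` and `to_light` are assumed unit length; `to_light` points from the surface
/// toward the light. The result is clamped to 1.0.
pub fn vertex_light(normal: Vec3, to_light: Vec3, ambient: f32, diffuse: f32) -> f32 {
    (ambient + diffuse * normal.dot(to_light).max(0.0)).min(1.0)
}

/// Gouraud shading: a fragment only interpolates the three vertex intensities
/// (using its barycentric weights), so lighting can only vary where vertices exist.
pub fn gouraud(weights: [f32; 3], vertex_intensity: [f32; 3]) -> f32 {
    weights[0] * vertex_intensity[0]
        + weights[1] * vertex_intensity[1]
        + weights[2] * vertex_intensity[2]
}
```

A bright spot that falls between the vertices of a large triangle simply disappears in this scheme. Splitting the triangle adds vertices where the light can register.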

Onward to LoFi 3D gaming!
