I've been learning and using Rust for nearly four and a half years, since version 1.6. That's a good amount of time to become familiar with some of the troubles of the language, the tooling, and the ecosystem. But this is a slightly different story, this is a dissent to a common criticism that the compiler is slow. In this discussion, I will argue that these claims are misleading at best.

|

| rustc compiling a non-trivial application. |

There is plenty of evidence that suggests rustc is slow relative to compilers for other languages; Go can compile applications with similar complexity in 1/5 the time, and clang or gcc can do the same for applications written in C. It might be acceptable to justify that the Rust macro and type systems are significantly more complex than what is usually written in either Go or C, and that it warrants much heavier lifting by the Rust compiler. While there is some truth in that sentiment, I believe there is more to it than that. I believe it's more of a human problem than an algorithmic one.

I frequent URLO, helping answer questions from time to time. I mostly use it to absorb information, learning about the language and the greater ecosystem around it. I have also spoken out against bloat on occasion, and I would like to continue raising awareness through this article.

First, let's take a brief detour to examine software bloat in a historical context. The first thing that comes to my mind is Wirth's Law, which says in essence that software [developers] cancel out all advances in hardware performance by filling in the gaps. Read Niklaus Wirth's paper, A Plea for Lean Software, it is well worth the time investment. This is by no means a new or unique observation. Continuing to explore this path of "bloatware" any further would be a disservice to the reader as it strays from my primary point. But it stands as another example of the fundamental problem, that humans are enigmatic and human behavior is even less clear.

Most developers make a time tradeoff while writing software. The only real goal for a developer is to solve a particular problem. Say Mary is making a game; she doesn't have infinite time to spend on perfecting the game, but she can get something reasonable completed within a reasonable timeframe. Mary knows she has a finite number of remaining heartbeats, the currency that motivates life itself, so she will wisely spend those heartbeats by ignoring minutiae to focus on the problem at hand. This isn't a bad thing! A game is produced, and she can go on to work on her next big hit.

But what does it say of the internals of the product? Does it do anything wasteful? Could it be made a little more efficient? Use a little less energy (especially battery power)? Surely it can! Mary doesn't have time to make it perfect. And that's really the story I want to tell.

My Story

The reason I'm describing this hypothetical game developer and her software is because I've done this myself many times. I write code and when I'm satisfied with how it works, I move on to something else leaving a large number of breadcrumbs along the way. Anyone could look at the situation and exclaim, "Gee, what a mess! Who would do this?!"

A surprising amount of my code that is currently running in a production environment is Python. It is relied upon by millions of people every day, and it is extraordinarily expensive to operate. Lesson learned is that though the language choice may have allowed me to rapidly evolve a project from conception to production, the long term deficits are not at all worth what little was gained early on. What do you do with multiple projects that are written in an objectively slow language, using objectively bad dynamic type transformations? You run them under a JIT compiler, of course! If it has to be objectively slow, we can at least regain some of the gross efficiency loss with a little aggressive compiler optimization.

And this leads to my point, at long last. Software developers, being imperfect and making wise decisions about how to spend their heartbeats, build software by leveraging software written by those who came before them. Pick a language and compiler here, add a smidge of libraries and dependencies there, mix it all together and you got yourself one deliciously solved problem. But of course, not before you create at least one, perhaps many other problems. Importantly, these decisions are not made out of laziness, but of necessity.

Enough praise cannot be given to the developers who put heroic amounts of effort into optimizing their code. Rigorously benchmarking and profiling, paying keen attention to where CPU cycles and heap blocks are going, and reigning in overly expensive code paths. We see this in many places from the regex crate to the actix framework, all the way down into the compiler itself. So many positive and impressive feats, it's always inspiring.

So if the compiler is actually quite good already and has a team dedicated to making it faster, why does it seem so slow to so many Rust developers? My suspicion is that this conception is mostly self-imposed by developers who are quick to take advantage of just how effortless it is to bring in dependencies. There is almost no resistance to depending on an argument parsing crate -- and this is where things might get a little controversial -- which with all of its dependencies takes up 400 KiB in the executable (after stripping debug symbols). 400 KiB of your binary size goes to argument parsing? Oh well, I can let that slide; 400 KiB costs nothing these days.

But what about compile time? What does that cost? If you value your heartbeats, you probably dislike that clap takes so long to compile. On my laptop, the 20_subcommands example took 1 minute and 14 seconds to compile. And the example doesn't have any business logic at all. It's entirely just a command line parser. But is the compile time substantial in practice? Surely clap will be used in a real-world application where argument parsing is only done within the first few dozen milliseconds or so when the program is started. The majority of the application logic is going to be far more costly, right? Right?

A Case Study

One of my side projects is learning digital logic with an inexpensive FPGA board. A handy tool for testing digital logic is a waveform viewer, and I was able to find a very simple one a few weeks back: dwfv. It runs in the terminal, presents a TUI, has editing support, and vim key bindings. Neat! The first thing I noticed about it is that compiling the release build took almost two and a half minutes. Not an ideal first impression, but there it is.

As I played with it, I realized this is probably a tool I will be keeping in my back pocket. So it's worth helping out, contributing to open source and all that. My first PR was (surprise!) replacing clap with gumdrop. Now, I've had some success with gumdrop in the past, so I knew what I was getting into. It isn't always possible to provide exactly the same CLI that clap will provide, and this was no exception. The position filename had to change into an optional argument so that free args could be used for the subcommand syntax. A very reasonable tradeoff, in my opinion, for reducing the compile time by 30 seconds. Bonus; the executable size was also reduced by 500 KiB.

Alright, I'm sorry for picking on clap. I know it has its benefits over simpler argument parsing crates. If I had my druthers, I would just use the environment instead of argument parsing at all. Real talk, argument parsing is more human friendly, and the 12-factor app guidelines are mostly targeted to microservices anyway. Also clap does have a lot of expressive power, I can't discount that.

The next thing I replaced was the parser generator, lalrpop with nom. I had looked at both of these briefly while trying to decide on how to write a parser for an assembler project that I planned to RIIR. I settled on nom for that project, and it was overall a very good experience. It also turned out to be a good change for dwfv, which retained full syntax compatibility and reduced the build time to about 40 seconds. The executable size was only reduced by 32 KiB this time.

Finally, I was able to replace regex with some simple iterator-based parsers, reducing the build time to 26 seconds, and astonishingly reducing the executable size by a whopping 1.14 MB. The overall before-and-after looks like this:

| Before | After | |

| Compile time | 2m 22s | 26.63s |

| Binary size | 2.55 MiB | 942 KiB |

| Dependencies | 105 | 22 |

Times captured with rustc 1.46.0-nightly (826cb062a 2020-06-05) on Intel(R) Core(TM) i7-7920HQ CPU @ 3.10GHz.

This represents a significant improvement in compile time, with the latest PR being more than five times faster than when I first discovered the application. Non-trivial benefits are also seen in fewer number of dependencies and a smaller executable size. All without losing any features, and with only a very minor change to the CLI argument options.

Wrapping Up

This was a very interesting project from many perspectives, and I'm happy to get the chance to share my findings. What I've tried to show here is that a lot of what some might consider to be a "slow compiler" can be traced back to a developer not being diligent with dependency management, or preferring runtime performance over compile time, or even "developer velocity" over compile time (whatever that means!) Remember what I said about Python and its ability to allow developers to rapidly build things? Sometimes a regular expression feels that way. Hidden costs are always a surprise.

This is not always the case, however. There will be times that you must trade compile speed for runtime performance. Those should be the exception, not the rule. Most Rust developers can reduce their compile times with just a little nudge to compile less code.

The tools used for this analysis were cargo tree (included in cargo starting with Rust 1.44!) and cargo-bloat. Finding alternative crates is not always easy, and I don't have any general recommendations for anyone wishing to attempt any similar work. The "dependencies" statistics shown for crates on lib.rs can help provide a basis for comparison if you know your options and just need to weigh them.

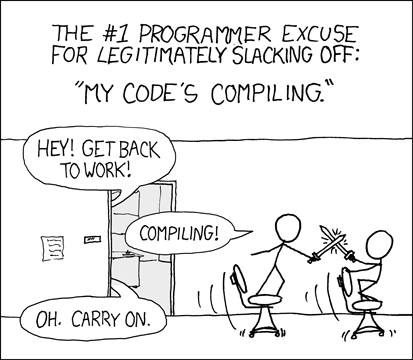

I would also be remiss to ignore the elephant in the room.

|

| xkcd #303 (CC-BY-NC 2.5) |

I guess it's not just the Rust compiler, after all!

Last edited 2020-06-16 for typographical errors and fixing the xkcd image.

7 comments:

2.55 MiB - a whopping 1.14 MB != 942 KiB

You're right. The other two patches reduced the file size by 524 KB and 31 KB respectively. I did screw up the numbers in the table which should read 2.68 MB and 965 KB.

The total difference is about 1.72 MB.

For other eagle-eyed readers, there was a fourth patch that reduced the file size by another 23 KB, but it had nothing to do with improving the build times.

If a minimal executable size is the main goal rather than compile time, you can try enabling LTO, as it removes dead code among other things.

Thanks for sharing your experience! But I wonder how much of a trouble this compilation time to you.

As far as I know, dependencies compile only once, so when you work on a project, you only recompile your own code. During first compilation you can go get yourself a cup of coffee, it's not like hours, only minutes in your example. Is this first experience that much important to you?

Yes, it does, because the clean build time starts mattering a lot in production build environments for non-trivially complicated software systems.

With the parser replaced, have you measured if that results in runtime performance changes? VCD files can be huge

@Vlad, I have not! But if runtime parsing of VCD files was a primary concern, I would probably approach it by replacing the parser entirely with one that can be lazily queried. For some amount of "huge", doing all parsing ahead of time is going to end up perceptively slow. Doing the work only as necessary (e.g., "just in time") will improve perceptive performance by amortizing runtime cost over a much larger time window. But I have not explored these options, myself.

Post a Comment